In generative image and video modeling, Stable Video Diffusion marks a significant step forward for both text-to-video and image-to-video synthesis. The paper introducing it, published by Stability AI, presents a latent video diffusion model. It explains how the model was trained, how its training data was curated, and what the model can be used for.

The core idea is latent video diffusion. Instead of generating pixels directly, the model runs the diffusion process in the compressed latent space of an autoencoder and then decodes the result into video frames. The paper analyzes the model's training methodology in detail and shows how Stable Video Diffusion generates video from text and image inputs. Its findings matter both for understanding latent video diffusion and for practical work in generative modeling.

Stable Video Diffusion

Stable Video Diffusion builds on diffusion models, which have proven effective at generating images from text. The paper starts from an existing latent image diffusion model and turns it into a strong video generator. The authors stress that the choice and curation of training data has a large effect on the result, even though previous video work has largely overlooked it. They also draw parallels with strategies that have worked well for image models.
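A common way to turn an image diffusion model into a video model is to keep the image model's spatial layers and interleave them with new temporal layers. The spatial layers process each frame independently, while the temporal layers mix information across frames. The toy sketch below illustrates only that general idea in plain Python. The per-frame function, the three-tap temporal filter, and the mixing factor `alpha` are hypothetical stand-ins, not the layers Stable Video Diffusion actually uses.

```python
from typing import Callable, List

# A toy latent frame: a flat list of floats standing in for a C x H x W tensor.
Frame = List[float]


def spatial_layer(frames: List[Frame], fn: Callable[[Frame], Frame]) -> List[Frame]:
    """Apply the same (pretrained image) layer to every frame independently."""
    return [fn(frame) for frame in frames]


def temporal_layer(frames: List[Frame], kernel=(0.25, 0.5, 0.25)) -> List[Frame]:
    """Mix each latent value with the same position in neighboring frames.

    A 3-tap filter over the time axis; edge frames are repeated at the
    clip boundaries so every frame has two neighbors.
    """
    num_frames = len(frames)
    out = []
    for t in range(num_frames):
        prev = frames[max(t - 1, 0)]
        cur = frames[t]
        nxt = frames[min(t + 1, num_frames - 1)]
        out.append([
            kernel[0] * p + kernel[1] * c + kernel[2] * n
            for p, c, n in zip(prev, cur, nxt)
        ])
    return out


def video_block(frames: List[Frame], fn: Callable[[Frame], Frame], alpha: float) -> List[Frame]:
    """Spatial layer, then temporal layer, blended by a (hypothetical) mixing factor.

    alpha = 1.0 returns the per-frame image-layer output unchanged;
    smaller values let the temporal layer contribute more.
    """
    spatial = spatial_layer(frames, fn)
    temporal = temporal_layer(spatial)
    return [
        [alpha * s + (1.0 - alpha) * m for s, m in zip(fs, fm)]
        for fs, fm in zip(spatial, temporal)
    ]


def identity(frame: Frame) -> Frame:
    return list(frame)


if __name__ == "__main__":
    clip = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # three frames, two latent values each
    print(video_block(clip, identity, alpha=0.0))
```

With `alpha=0.0`, the example prints `[[1.5, 2.5], [3.0, 4.0], [4.5, 5.5]]`. Each frame is pulled toward its neighbors. Setting `alpha=1.0` reproduces the image layer exactly, which is why a blend like this is a convenient way to attach temporal layers to a pretrained image network.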

Data Curation for High-Quality Video Synthesis

A key contribution of the paper is its systematic approach to curating a large-scale video dataset. The authors identify three distinct stages for training generative video models:

- text-to-image pretraining;
- video pretraining on a large dataset at low resolution;
- high-resolution video finetuning on a smaller dataset of high-quality videos.

To build the training data, the authors describe a processing and annotation workflow with four parts:

- cut detection, which splits raw videos into clips without scene changes;
- synthetic captioning;
- optical flow analysis, which identifies clips with little or no motion;
- aesthetic scoring.
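The filtering side of such a workflow can be sketched in three steps. First, split each raw video at detected cuts. Next, attach per-clip annotations. Finally, keep only the clips that pass a set of thresholds. The sketch assumes that upstream tools have already computed the cut positions, optical-flow magnitudes, and aesthetic scores. The `Clip` fields and all threshold values are hypothetical, and the sketch leaves out captioning entirely.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Clip:
    start: int        # first frame index (inclusive)
    end: int          # last frame index (exclusive)
    mean_flow: float  # average optical-flow magnitude; low means a near-static clip
    aesthetic: float  # score from some aesthetic predictor (hypothetical 0-10 scale)

    @property
    def num_frames(self) -> int:
        return self.end - self.start


def split_at_cuts(num_frames: int, cuts: Iterable[int]) -> List[Tuple[int, int]]:
    """Turn detected cut positions (frames where a new shot begins) into clip ranges."""
    inner = sorted({c for c in cuts if 0 < c < num_frames})  # dedupe, drop out-of-range cuts
    bounds = [0] + inner + [num_frames]
    return list(zip(bounds[:-1], bounds[1:]))


def keep_clip(clip: Clip, min_frames: int = 16, min_flow: float = 0.5,
              min_aesthetic: float = 4.5) -> bool:
    """Drop clips that are too short, too static, or aesthetically weak."""
    return (clip.num_frames >= min_frames
            and clip.mean_flow >= min_flow
            and clip.aesthetic >= min_aesthetic)


def curate(clips: Iterable[Clip], **thresholds) -> List[Clip]:
    """Keep the clips that pass every filter; thresholds override keep_clip's defaults."""
    return [c for c in clips if keep_clip(c, **thresholds)]


if __name__ == "__main__":
    print(split_at_cuts(120, [40, 90]))  # [(0, 40), (40, 90), (90, 120)]
    clips = [
        Clip(0, 40, mean_flow=1.2, aesthetic=5.1),    # kept
        Clip(40, 90, mean_flow=0.1, aesthetic=6.0),   # dropped: nearly static
        Clip(90, 120, mean_flow=2.0, aesthetic=3.0),  # dropped: low aesthetic score
    ]
    print([(c.start, c.end) for c in curate(clips)])  # [(0, 40)]
```

Keeping the thresholds as keyword arguments makes it easy to compare differently filtered subsets of the same annotated pool. That kind of comparison is how a curation strategy would typically be evaluated.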

Training Video Models at Scale

The authors then train a video model at scale on the curated dataset, producing a strong base model. This base model is initialized from Stable Diffusion 2.1 and serves as a versatile starting point for downstream tasks such as text-to-video, image-to-video, and frame interpolation. The paper also examines finetuning in detail and shows that data curation improves model performance.
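The relationship between the shared base model and the task-specific models can be sketched as a small schedule. The base model is trained once, starting from Stable Diffusion 2.1 weights, and each downstream task then branches off with its own finetuning run. The sketch is purely illustrative. The stage names, the data labels, and the `fake_train` stand-in are hypothetical. Treating high-resolution finetuning as one run per task is a simplifying assumption, not the paper's code or exact recipe.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Stage:
    name: str
    data: str  # descriptive label for the training data, not a real dataset path


# Text-to-image pretraining is not re-run here: training starts from
# existing Stable Diffusion 2.1 image weights.
VIDEO_PRETRAINING = Stage("video_pretraining", "large curated video set, low resolution")

TASKS: List[str] = ["text-to-video", "image-to-video", "frame-interpolation"]


def finetune_stage(task: str) -> Stage:
    # Simplifying assumption: one high-resolution finetuning run per task.
    return Stage(f"finetune_{task}", "small high-quality video set, high resolution")


def build_models(init: str, train_stage: Callable[[str, Stage], str]) -> Dict[str, str]:
    """Train the shared base model once, then branch one finetune per task."""
    base = train_stage(init, VIDEO_PRETRAINING)
    return {task: train_stage(base, finetune_stage(task)) for task in TASKS}


def fake_train(checkpoint: str, stage: Stage) -> str:
    """Hypothetical stand-in for training: just derives a new checkpoint name."""
    return f"{checkpoint}>{stage.name}"


if __name__ == "__main__":
    models = build_models("sd-2.1", fake_train)
    print(models["image-to-video"])  # sd-2.1>video_pretraining>finetune_image-to-video
```

The expensive large-scale pretraining happens only once. Each task pays only for its smaller finetuning run, which is what makes the base model a reusable starting point.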

High-Resolution Text-to-Video and Image-to-Video Models

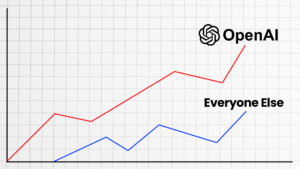

Building on the base model, the paper produces high-resolution text-to-video and image-to-video models. After finetuning on high-quality video data, these models outperform prior approaches in visual quality. The authors support this result with human preference studies.
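Human preference studies like these are often summarized as pairwise win rates. Raters see two videos generated for the same input and pick the better one, or call a tie. The sketch below shows one common way to tally such votes, counting a tie as half a win. The vote format and the tie convention are assumptions for illustration, and the example votes are made up rather than taken from the paper.

```python
from collections import Counter
from typing import Iterable


def win_rate(votes: Iterable[str], model: str) -> float:
    """Fraction of pairwise comparisons won by `model`, with ties counted as half a win.

    Each vote is the name of the preferred model, or "tie".
    """
    counts = Counter(votes)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no votes to tally")
    return (counts[model] + 0.5 * counts["tie"]) / total


if __name__ == "__main__":
    votes = ["ours", "ours", "baseline", "tie", "ours"]  # made-up example votes
    print(win_rate(votes, "ours"))  # 0.7
```

Counting ties as half a win keeps the two models' rates summing to one. This makes a rate above 0.5 easy to read as an overall preference.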

Camera Motion Control and Frame Interpolation

For camera motion control, the authors train LoRA (low-rank adaptation) modules on top of the image-to-video model. These modules let image-to-video generation follow specific, controlled camera motions. The paper also finetunes the model for frame interpolation. In this mode, Stable Video Diffusion predicts the frames between given frames, which raises the frame rate and gives smoother playback.
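LoRA adapts a frozen weight matrix W by adding a trainable low-rank update. The adapted layer computes W x + (alpha / r) B A x, where A and B are small matrices of rank r. The plain-Python sketch below shows that generic update for a single linear layer, plus the frame-count arithmetic for interpolation. It does not model where the adapters sit inside Stable Video Diffusion. The helper names and the default of three inserted frames per gap are assumptions for illustration.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_linear(w: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float) -> Vector:
    """y = W x + (alpha / r) * B (A x), with W frozen and only A and B trained.

    Shapes: W is d_out x d_in, A is r x d_in, B is d_out x r.
    """
    rank = len(a)
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    scale = alpha / rank
    return [y + scale * u for y, u in zip(base, update)]


def interpolated_length(num_frames: int, inserted_per_gap: int = 3) -> int:
    """Frame count after inserting frames between every pair of neighboring frames."""
    return (num_frames - 1) * (inserted_per_gap + 1) + 1


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]   # frozen 2 x 2 weight
    a = [[1.0, 1.0]]               # rank-1 down-projection
    b = [[1.0], [0.0]]             # rank-1 up-projection
    print(lora_linear(w, a, b, [1.0, 2.0], alpha=1.0))             # [4.0, 2.0]
    print(lora_linear(w, a, [[0.0], [0.0]], [1.0, 2.0], alpha=1.0))  # [1.0, 2.0]
    print(interpolated_length(14))                                 # 53
```

The standard LoRA recipe initializes B to zeros, so the adapted layer starts out identical to the frozen one, as the second print shows. Training then only has to learn a small correction. On the interpolation side, inserting three frames into each gap shrinks the spacing between frames by a factor of four. Playing the clip back over the same duration therefore needs four times the frame rate.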

Multi-View Generation

The research also extends Stable Video Diffusion to multi-view generation. After finetuning on multi-view datasets of synthetic 3D objects and of household objects, the model generates consistent views of an object. It outperforms the state-of-the-art multi-view methods it is compared against.
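One way to picture multi-view generation with a video model is to treat the frames of a clip as views from a camera orbiting the object. The sketch below computes evenly spaced camera positions on such an orbit. The number of views, the radius, and the elevation are hypothetical parameters, not the rendering setup behind the paper's finetuning data.

```python
import math
from typing import List, Tuple


def orbit_cameras(num_views: int, radius: float = 2.0,
                  elevation_deg: float = 0.0) -> List[Tuple[float, float, float]]:
    """Camera positions evenly spaced in azimuth around an object at the origin.

    Frame i of a generated clip corresponds to azimuth 360 * i / num_views degrees.
    Positions are (x, y, z) with z pointing up; every camera sits at distance `radius`.
    """
    elev = math.radians(elevation_deg)
    cams = []
    for i in range(num_views):
        azim = 2.0 * math.pi * i / num_views
        cams.append((
            radius * math.cos(elev) * math.cos(azim),
            radius * math.cos(elev) * math.sin(azim),
            radius * math.sin(elev),
        ))
    return cams


if __name__ == "__main__":
    for x, y, z in orbit_cameras(4, radius=1.0):
        print(f"{x:+.2f} {y:+.2f} {z:+.2f}")
```

Under this framing, "consistent multi-view outputs" means that neighboring frames must agree on the object's shape and appearance as the camera steps around it. That is the same kind of temporal consistency a video model is already trained to produce.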

Conclusion

Stable Video Diffusion is a strong contribution to generative video modeling. The paper gives a detailed account of its training strategy, its data curation methodology, and the model's range of applications, which makes it a pioneering reference in the field. Beyond advancing text-to-video and image-to-video synthesis, the research lays the groundwork for future work on multi-view generation and beyond.

This overview is meant as a guide for researchers and practitioners. It covers the main ideas behind Stable Video Diffusion and its potential to reshape generative video modeling. Stability AI has also released the code and model weights, which makes the technology easier to adopt and explore.

Note: This article is based on the Stable Video Diffusion research paper and aims to convey its key insights clearly and accessibly. For more information, see Stability AI’s website.

To learn more about what technology can do for your business, contact Epimax and follow us on social media.